aerial-mapper

Overview

- Loads camera poses from different formats (e.g., PIX4D, COLMAP)

- Generates a dense point cloud from raw images, camera poses and camera intrinsics

- Generates Digital Surface Models (DSMs) from raw point clouds and exports them, e.g., to GeoTiff format

- Provides different methods to generate (ortho-)mosaics from raw images, camera poses and camera intrinsics
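Loading poses is format-specific. As an illustration only (this is not aerial_mapper's own loader, and `quat_to_rot`, `camera_center` and `parse_colmap_images` are hypothetical names), the Python sketch below reads COLMAP's text export `images.txt`. Each image record there stores a world-to-camera rotation as a quaternion (QW, QX, QY, QZ) and a translation (TX, TY, TZ), followed by one line of 2D observations, which may be empty. The camera center in world coordinates then follows as C = -Rᵀt.

```python
import math

# Hypothetical helpers for illustration; not part of aerial_mapper.

def quat_to_rot(qw, qx, qy, qz):
    """Row-major 3x3 rotation matrix from a quaternion (w, x, y, z), normalized first."""
    n = math.sqrt(qw * qw + qx * qx + qy * qy + qz * qz)
    w, x, y, z = qw / n, qx / n, qy / n, qz / n
    return [
        [1 - 2 * (y * y + z * z), 2 * (x * y - z * w), 2 * (x * z + y * w)],
        [2 * (x * y + z * w), 1 - 2 * (x * x + z * z), 2 * (y * z - x * w)],
        [2 * (x * z - y * w), 2 * (y * z + x * w), 1 - 2 * (x * x + y * y)],
    ]


def camera_center(R, t):
    """C = -R^T t for a world-to-camera pose x_cam = R x_world + t."""
    return tuple(-sum(R[j][i] * t[j] for j in range(3)) for i in range(3))


def parse_colmap_images(text):
    """Parse COLMAP's images.txt into {image_name: (R, t, camera_center)}."""
    lines = [ln for ln in text.splitlines() if not ln.startswith("#")]
    poses = {}
    i = 0
    while i < len(lines):
        fields = lines[i].split()
        if not fields:  # tolerate stray blank lines between records
            i += 1
            continue
        # IMAGE_ID, QW, QX, QY, QZ, TX, TY, TZ, CAMERA_ID, NAME
        qw, qx, qy, qz, tx, ty, tz = map(float, fields[1:8])
        name = " ".join(fields[9:])
        R = quat_to_rot(qw, qx, qy, qz)
        t = (tx, ty, tz)
        poses[name] = (R, t, camera_center(R, t))
        i += 2  # skip the record's POINTS2D line (possibly empty)
    return poses


SAMPLE = """# IMAGE_ID, QW, QX, QY, QZ, TX, TY, TZ, CAMERA_ID, NAME
# POINTS2D[] as (X, Y, POINT3D_ID)
1 1 0 0 0 1 2 3 1 img_0001.jpg
10.0 20.0 -1
2 0.7071067811865476 0 0 0.7071067811865476 1 0 0 1 img_0002.jpg

"""

poses = parse_colmap_images(SAMPLE)
print(poses["img_0001.jpg"][2])  # (-1.0, -2.0, -3.0)
```

Returning the camera center next to R and t is a convenience: later mapping steps usually need to know where each camera was in the world, not only how it was oriented.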

Package Overview

- aerial_mapper: Meta package

- aerial_mapper_demos: Sample executables.

- aerial_mapper_dense_pcl: Dense point cloud generation using planar rectification.

- aerial_mapper_dsm: Digital Surface Map/Model generation.

- aerial_mapper_google_maps_api: Wrapper package for Google Maps API.

- aerial_mapper_grid_map: Wrapper package for grid_map.

- aerial_mapper_io: Input/Output handler that reads/writes poses, intrinsics, point clouds, GeoTiffs etc.

- aerial_mapper_ortho: Different methods for (ortho-)mosaic generation.

- aerial_mapper_thirdparty: Package containing third-party code.

- aerial_mapper_utils: Package for common utility functions.
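aerial_mapper_dense_pcl builds dense point clouds using planar rectification. Once two views are rectified so that corresponding pixels lie on the same image row, depth follows from the standard stereo relation Z = f·B/d (focal length f in pixels, baseline B, disparity d). The sketch below only covers that back-projection step; it assumes a disparity map is already available and that both rectified views share f and the principal point (cx, cy). It does not reproduce the package's rectification or matching, and `disparity_to_points` is a hypothetical name.

```python
# Hypothetical helper for illustration; not part of aerial_mapper.

def disparity_to_points(disparity, f, cx, cy, baseline):
    """Back-project a disparity map from a rectified stereo pair.

    Assumes both rectified views share focal length f (pixels) and principal
    point (cx, cy), and that `baseline` is the distance between the two
    camera centers. Points are returned in the left rectified camera frame.
    """
    points = []
    for v, row in enumerate(disparity):
        for u, d in enumerate(row):
            if d <= 0:
                continue  # no valid match for this pixel
            z = f * baseline / d  # depth from Z = f * B / d
            x = (u - cx) * z / f  # pinhole back-projection
            y = (v - cy) * z / f
            points.append((x, y, z))
    return points


cloud = disparity_to_points([[0, 10], [20, -1]], f=100.0, cx=1.0, cy=0.0, baseline=0.5)
print(cloud)
```

Larger disparities map to closer points, so depth resolution degrades with distance; this is one reason the baseline between the two views matters.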

Getting started

Output samples

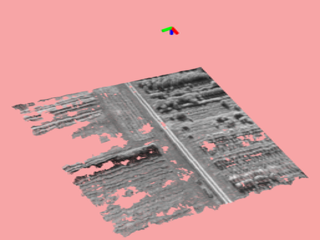

| Dense point cloud (from virtual stereo pair, 2 images) | Digital Surface Map (DSM, exported as GeoTiff) | (Ortho-)Mosaic (from homography, 249 images) |
|---|---|---|
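The (Ortho-)Mosaic sample above is described as generated from a homography. Assuming flat ground on the world plane Z = 0 and a world-to-camera pose x_cam = R x_world + t, a ground point (X, Y) projects to the image through the plane-induced homography H = K [r1 r2 t], where r1 and r2 are the first two columns of R. The sketch below (hypothetical names, not aerial_mapper's implementation) builds H and projects a ground point.

```python
# Hypothetical helpers for illustration; not part of aerial_mapper.

def matmul3(A, B):
    """Product of two 3x3 matrices given as nested lists."""
    return [[sum(A[i][k] * B[k][j] for k in range(3)) for j in range(3)] for i in range(3)]


def ground_plane_homography(K, R, t):
    """H = K [r1 r2 t]: maps ground points (X, Y, 1) on the plane Z = 0 to pixels.

    Assumes a world-to-camera pose x_cam = R x_world + t and flat ground at Z = 0.
    """
    M = [[R[i][0], R[i][1], t[i]] for i in range(3)]  # r3 drops out because Z = 0
    return matmul3(K, M)


def project_ground_point(H, X, Y):
    """Apply H to the homogeneous ground point (X, Y, 1) and dehomogenize."""
    u = H[0][0] * X + H[0][1] * Y + H[0][2]
    v = H[1][0] * X + H[1][1] * Y + H[1][2]
    w = H[2][0] * X + H[2][1] * Y + H[2][2]
    return u / w, v / w


K = [[100.0, 0.0, 50.0], [0.0, 100.0, 40.0], [0.0, 0.0, 1.0]]
R = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
t = [0.0, 0.0, 10.0]  # ground plane lies 10 units ahead along the optical axis
H = ground_plane_homography(K, R, t)
print(project_ground_point(H, 1.0, 2.0))  # (60.0, 60.0)
```

A mosaic built this way would typically iterate over output ground pixels and sample each image at H·(X, Y, 1) (inverse warping), which avoids holes in the output. Because the flat-ground assumption ignores terrain height, objects that stand above the plane are misplaced.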

| Raw images | Dense point cloud | Digital Surface Map |
|---|---|---|
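The samples above go from raw images to a dense point cloud and then to a Digital Surface Map. A minimal way to rasterize a cloud into a DSM, assuming a regular grid and keeping the highest point per cell (a common DSM convention), is sketched below. The package's own interpolation and hole filling are not reproduced, and `rasterize_dsm` is a hypothetical name. Cells that receive no points stay NaN (no data).

```python
import math

# Hypothetical helper for illustration; not part of aerial_mapper.

def rasterize_dsm(points, x_min, y_min, cell_size, width, height):
    """Max-height DSM: each cell stores the highest z of the points falling in it.

    Cells that receive no points stay NaN (no data). Row 0 corresponds to y_min.
    """
    grid = [[float("nan")] * width for _ in range(height)]
    for x, y, z in points:
        col = math.floor((x - x_min) / cell_size)
        row = math.floor((y - y_min) / cell_size)
        if 0 <= col < width and 0 <= row < height:  # ignore points outside the grid
            current = grid[row][col]
            if math.isnan(current) or z > current:
                grid[row][col] = z
    return grid


cloud = [(0.2, 0.2, 1.0), (0.4, 0.1, 3.0), (1.5, 0.5, 2.0), (5.0, 5.0, 9.0)]
dsm = rasterize_dsm(cloud, x_min=0.0, y_min=0.0, cell_size=1.0, width=3, height=1)
print(dsm)  # [[3.0, 2.0, nan]]
```

A north-up GeoTiff usually stores the row with the largest y first, so the rows of this grid would need to be reversed before such an export.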

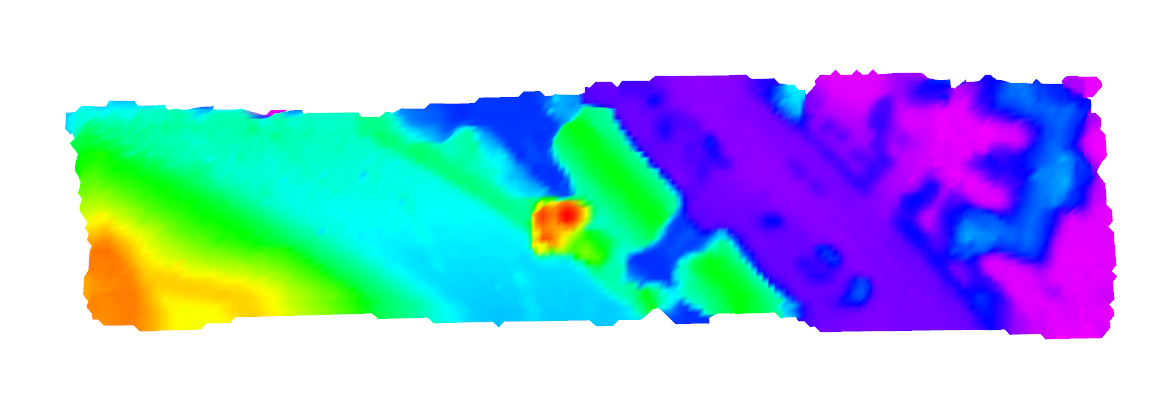

| Observation angle (red: nadir) | Grid-based orthomosaic (cell resolution: 0.5 m) | Textured DSM |
|---|---|---|
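The observation-angle panel shows how obliquely each location was seen, with red marking nadir views. The sketch below shows how such an angle could be computed, and how a grid-based orthomosaic might pick, for each cell, the image that sees the cell closest to nadir. Whether aerial_mapper selects images exactly this way is an assumption. The sketch also assumes a z-up world frame, and `observation_angle_deg` and `most_nadir_image` are hypothetical names.

```python
import math

# Hypothetical helpers for illustration; not part of aerial_mapper.

def observation_angle_deg(camera_center, point):
    """Angle between the ray camera->point and the nadir direction (0, 0, -1).

    Assumes a z-up world frame; 0 degrees means the point is seen straight down.
    """
    dx = point[0] - camera_center[0]
    dy = point[1] - camera_center[1]
    dz = point[2] - camera_center[2]
    norm = math.sqrt(dx * dx + dy * dy + dz * dz)
    if norm == 0.0:
        raise ValueError("point coincides with the camera center")
    # Dot product of the unit ray with (0, 0, -1), clamped against rounding.
    cos_angle = max(-1.0, min(1.0, -dz / norm))
    return math.degrees(math.acos(cos_angle))


def most_nadir_image(cell_center, camera_centers):
    """Pick the image whose camera sees the cell center closest to nadir."""
    return min(camera_centers,
               key=lambda name: observation_angle_deg(camera_centers[name], cell_center))


cameras = {"img_a": (0.0, 0.0, 100.0), "img_b": (100.0, 0.0, 100.0)}
print(observation_angle_deg(cameras["img_b"], (0.0, 0.0, 0.0)))  # about 45 degrees
print(most_nadir_image((0.0, 0.0, 0.0), cameras))  # img_a
```

Preferring near-nadir views tends to reduce the visible lean of tall structures in the mosaic, because those views see less of the structures' sides.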

Publications

If you use this work in an academic context, please cite the following publication:

T. Hinzmann, J. L. Schönberger, M. Pollefeys, and R. Siegwart, "Mapping on the Fly: Real-time 3D Dense Reconstruction, Digital Surface Map and Incremental Orthomosaic Generation for Unmanned Aerial Vehicles," in Field and Service Robotics - Results of the 11th International Conference, 2017.

@INPROCEEDINGS{fsr_hinzmann_2017,
  Author = {T. Hinzmann and J. L. Schönberger and M. Pollefeys and R. Siegwart},
  Title = {Mapping on the Fly: Real-time 3D Dense Reconstruction, Digital Surface Map and Incremental Orthomosaic Generation for Unmanned Aerial Vehicles},
  Booktitle = {Field and Service Robotics - Results of the 11th International Conference},
  Year = {2017}
}

Acknowledgment

This work was partially funded by the European FP7 project SHERPA (FP7-600958) and the Federal Office armasuisse Science and Technology under project number 050-45. Furthermore, the authors wish to thank Lucas P. Teixeira from the Vision for Robotics Lab at ETH Zurich for sharing scripts that bridge the gap between Blender and Gazebo.